AI now routinely supports productivity, decision-making, and legal work across industries. But while adoption is high, oversight often lags behind.

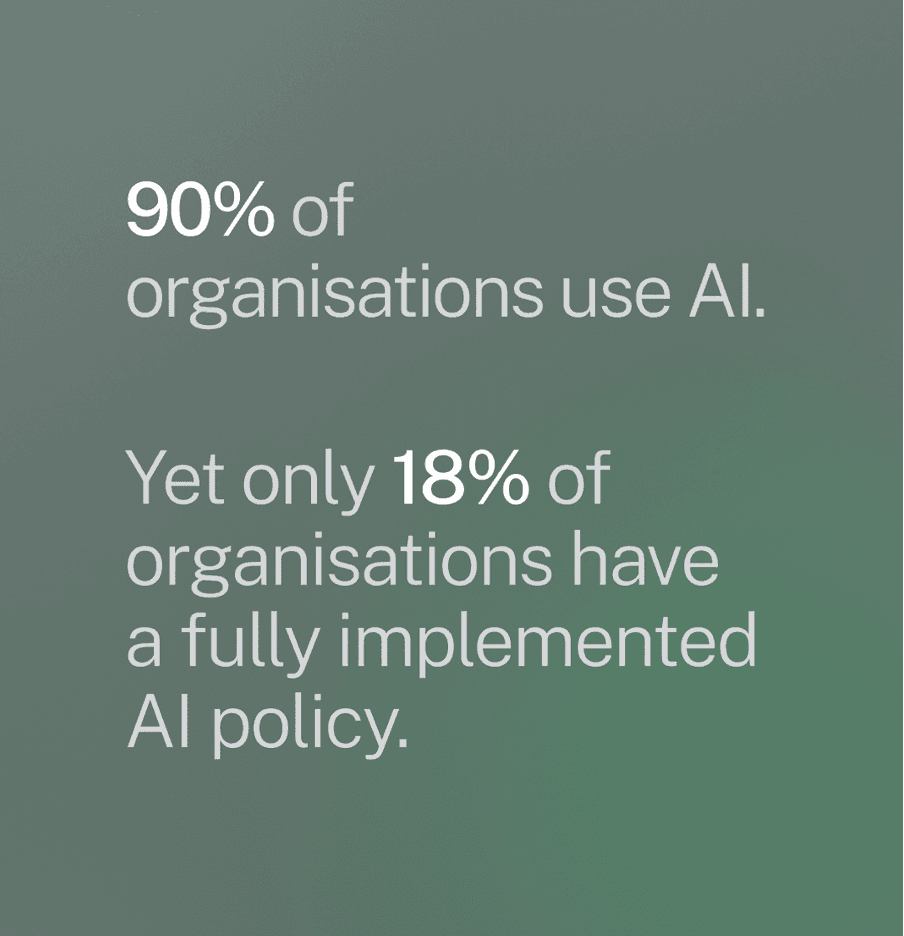

In our recent research, based on insights from 154 General Counsels across the UK, France, and Germany, we found that only 18 percent of organisations have a fully implemented AI governance framework. The result is a growing gap between AI adoption and control. This exposes organisations to legal, compliance, and reputational risks that remain under the radar until something goes wrong.

Here are the most common hidden risks our research revealed, and how to address them.

The stats in this article come from that research. You can download the full report here: The AI Governance Gap.

1. Shadow AI and lack of visibility

AI is often used informally. Employees may rely on free AI tools to write emails, analyse data, draft summaries and much more. This is usually done without approval, training, or tracking.

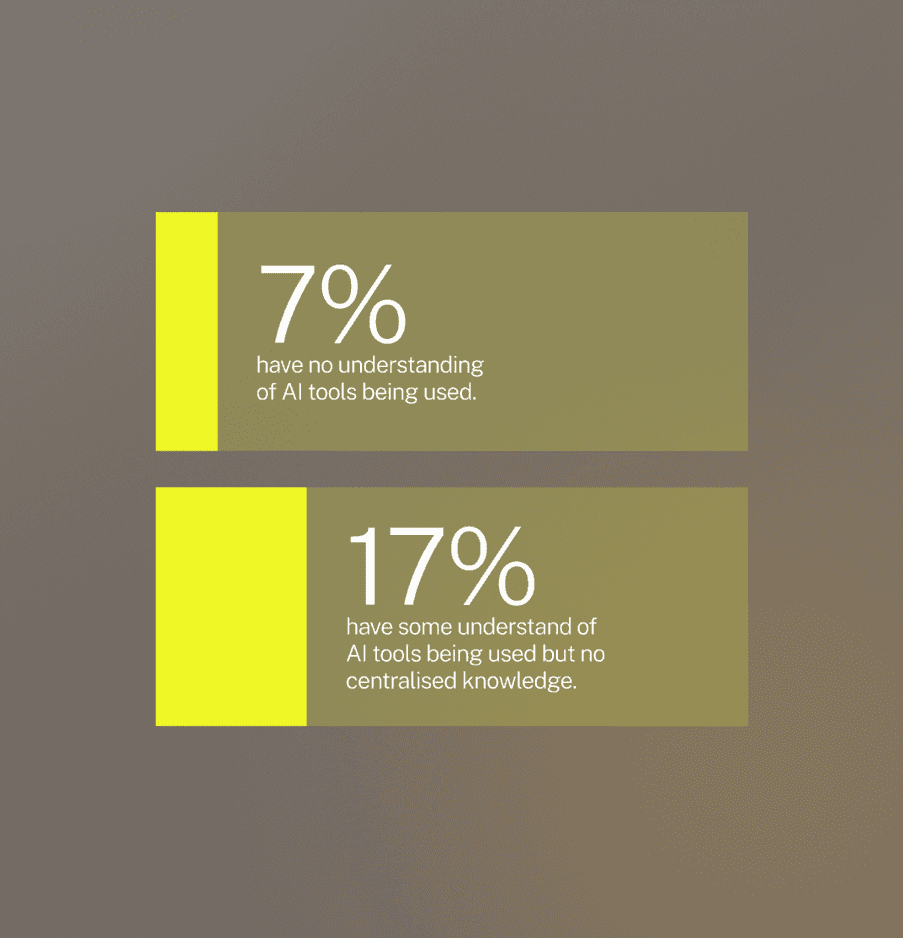

Although this helps them work faster and potentially produce better work, these tools are likely not vetted. The data entered is often sensitive, and there is no visibility into how that data is stored or shared. One in 14 firms does not know which AI tools are in use across the business.

Without visibility, there is no way to assess compliance or risk.

How to reduce the risk:

Acknowledge that AI use is already widespread, often via personal or unsanctioned tools

Identify what tools are in use and assess whether they meet internal standards

Offer approved, trackable alternatives rather than imposing bans, which tend to drive usage underground

Create an allowlist of tools, use single sign-on, and log activity for oversight and auditing
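As a rough illustration of the allowlist and logging step, the sketch below checks each request against a list of approved tools and writes an audit entry either way. The tool names, the `record_ai_request` function, and the log fields are hypothetical, and the user ID is assumed to come from your single sign-on provider.

```python
import json
import logging
from datetime import datetime, timezone

# Hypothetical allowlist: these tool names are placeholders, not recommendations.
APPROVED_TOOLS = {"approved-assistant", "contract-reviewer"}

audit_log = logging.getLogger("ai_usage_audit")


def record_ai_request(user_id: str, tool: str, purpose: str) -> bool:
    """Return True if the tool is on the allowlist; log every attempt either way."""
    allowed = tool in APPROVED_TOOLS
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,  # assumed to be supplied by single sign-on
        "tool": tool,
        "purpose": purpose,
        "allowed": allowed,
    }
    # One JSON object per line keeps the log easy to search during an audit.
    audit_log.info(json.dumps(entry))
    return allowed
```

Logging refused requests as well as approved ones is a deliberate choice here: the refusals show which unsanctioned tools people are trying to use, which helps decide what to vet next.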

Read more: How to assess legal AI platforms in 10 minutes

2. Data privacy and uncontrolled exposure

Sensitive data is sometimes entered into AI tools. This includes client, vendor, financial, or personal data. Even with strong internal policies, external AI tools may process data in ways your organisation cannot monitor.

This can lead to breaches of contract, regulatory fines, or reputational damage. Most AI systems are hosted by third parties. Organisations often lack insight into how those systems are trained or how data is retained.

How to reduce the risk:

Use privacy-first systems that anonymise sensitive data before processing (such as LEGALFLY)

Where possible, deploy AI models on premise to maintain control

Strip or hash sensitive inputs, apply access controls, and align retention with policy

Build workflows that support data rights, such as correction and deletion on request
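To make "strip or hash sensitive inputs" more concrete, here is a minimal sketch that replaces email addresses with keyed-hash tokens before text leaves your environment. It covers email addresses only, the pattern and function names are illustrative assumptions, and it does not describe how any particular product anonymises data. Note that keyed hashing is pseudonymisation rather than full anonymisation, so the output may still count as personal data.

```python
import hashlib
import hmac
import re

# Simplified pattern for illustration; real inputs need broader detection
# (names, account numbers, addresses and so on).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymise(value: str, secret_key: bytes) -> str:
    """Map a value to a stable token using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"<EMAIL_{digest[:10]}>"


def redact_emails(text: str, secret_key: bytes) -> str:
    """Replace every email address in the text with its token."""
    return EMAIL_PATTERN.sub(lambda m: pseudonymise(m.group(0), secret_key), text)
```

Because the same address always maps to the same token under a given key, the AI tool can still tell that two mentions refer to the same person without seeing who that person is.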

Read more: 5 must-haves for secure legal AI

3. Inaccurate or biased outputs

AI tools often sound confident. That does not make them right. If the data used to train the system is incomplete or biased, the outputs can be misleading or even discriminatory.

These errors can be difficult to detect. When AI is used in document review or decision support, small mistakes can have large impacts. In regulated industries, this can lead to enforcement action or loss of trust.

How to reduce the risk:

Use models trained for your environment, such as legal or finance, to reduce hallucinations

Ensure outputs are explainable, with clear references and reasoning

Retain human oversight to review and approve AI-generated content

Read more: Confidence, reliability and validity at LEGALFLY

4. Limited oversight of AI vendors

Many firms rely on external vendors to provide AI services. These vendors often handle sensitive data, but oversight is limited. Fewer than half of companies include vendor governance in their AI policies.

Without clear standards or contractual safeguards, firms risk data breaches, confidentiality lapses, or operational failures. The problem is worse in regulated sectors where vendor failures can trigger direct penalties.

How to reduce the risk:

Include vendor governance in formal AI policy frameworks

Require security and compliance reviews as part of onboarding

Maintain a central record of all external AI tools in use

5. Inconsistent enforcement and leadership ambivalence

Even when policies exist, they are not always followed. Only 31 percent of organisations require formal approval for AI use. Fewer than one in four say their rules are always followed.

This creates a gap between policy and practice. Policies may exist on paper, but they do not shape how tools are used or reviewed. This introduces silent compliance risks.

A further issue is leadership. Many organisations report that senior stakeholders lack AI knowledge or are hesitant to engage. This slows down oversight and creates uneven standards.

How to reduce the risk:

Assign ownership of AI governance at leadership level

Develop a centralised strategy that guides all departments

Embed policy into daily workflows using checklists, training, and oversight tools

Set clear rules for approval and ensure enforcement is consistent across teams

6. Gaps in regulatory implementation

New laws such as the EU's Digital Operational Resilience Act (DORA) are now in force, but many organisations are not sure how to apply the rules in practice.

Regulators provide broad guidance, but not specific instructions. As a result, firms may think they are compliant when they are not. On top of this, manual processes for compliance are slow and prone to errors.

How to reduce the risk:

Use AI platforms to automate reviews and flag compliance risks

Scan large volumes of contracts to identify exposure

Create playbooks tailored to key regulations and apply them consistently

Monitor changes in regulation and link them to affected agreements

Use AI to support third-party risk management and cross-border compliance
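The idea of a playbook applied consistently across contracts can be sketched in a few lines. In the example below, a playbook is a list of named rules and each contract is checked for clauses that appear to be missing. The rule names and keyword patterns are hypothetical and are not a statement of what DORA or any other regulation requires; keyword matching is a crude stand-in for the clause analysis a real review would need.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class PlaybookRule:
    name: str
    pattern: str  # regular expression suggesting the clause is present


# Hypothetical rules for illustration only.
EXAMPLE_PLAYBOOK = [
    PlaybookRule("audit rights", r"\baudit\b"),
    PlaybookRule("termination", r"\bterminat(e|ion)\b"),
    PlaybookRule("incident notification", r"\bincident\b"),
]


def missing_clauses(contract_text: str, playbook: list[PlaybookRule]) -> list[str]:
    """Return the names of rules whose pattern does not appear in the contract."""
    return [
        rule.name
        for rule in playbook
        if not re.search(rule.pattern, contract_text, flags=re.IGNORECASE)
    ]
```

Running the same playbook over every agreement gives a consistent list of gaps for a lawyer to review, rather than a verdict on compliance.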

Read the report: The AI Governance Gap.

Secure AI for regulated environments

LEGALFLY helps organisations manage these risks. It is built from the ground up for safety, security, and legal-grade reliability. Unlike general-purpose AI tools, it is designed to operate within the strict requirements of legal and compliance environments. All sensitive data (names, companies, financial terms) is automatically anonymised before processing. This ensures that client, employee, or transaction information never reaches the underlying AI models. Anonymisation works across text, images, and PDFs, and can be deployed in the cloud or fully on-premise, depending on your organisation's requirements.

LEGALFLY also prioritises explainability and control. Every suggestion is traceable, with clear justifications provided so users can assess what was changed and why. Legal professionals stay in full control: able to accept, reject, or edit outputs before anything is finalised. These design choices make LEGALFLY a safe, practical choice for teams working in high-stakes environments where confidentiality, accuracy, and accountability are non-negotiable. Schedule your demo today.